This is part one of my three-part series, based on a transcript of the ‘Legal Technology: Risk and Regulation’ video, on the different views that technologists and lawyers hold about legal regulation. This first part reviews the existing state of legal regulation. The second part will focus on the risks we have for lawyers, and the last part will be dedicated to discussing inevitable changes in technology.

The background for this series of articles is an interesting point about how differently technologists and lawyers view legal regulation.

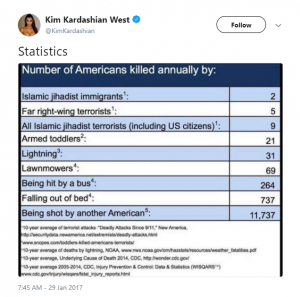

The difference between lawnmowers and terrorists

In some recent discussions of legal regulation, I was used as an example of a legal technologist with regard to risk-taking. I found that amusing because I am both the Principal of Cartland Law and a practicing Tax Lawyer, and also the creator of Ailira (Artificially Intelligent Legal Information Research Assistant), so also a technologist. What was correctly pointed out in this discussion is that there is a difference in how these two categories treat risk. Technologists will say, “Let’s go forward: we should not have anything holding us back.” And lawyers will go: “Woah!”

But there is something else that is missing in this discussion. I think that the existing state of legal regulation in Australia is wrong on two counts: it does not help technology advance, and it does not pick up on the risks of that technology.

Statistics and Risk Theory

The discussion on ‘lawnmowers vs. terrorists’ started with an observation by the US Statistician General about the number of people killed every year by terrorists and the number of people killed every year by lawnmowers. Kim Kardashian tweeted about how many people were killed by lawnmowers, saying we should not be worried about terrorists because in 2017 there were nine people killed by terrorists in the US and 69 people killed by lawnmowers. The conclusion we are meant to draw is that lawnmowers are a bigger risk than terrorists.

The statistics and risk theorist Nassim Taleb pointed out, quite correctly, that there is a big difference between these: you shouldn’t compare them because lawnmowers aren’t trying to kill you. A fascinating mathematical paper was then written that explains this in more detail: Are lawnmowers a greater risk than terrorists?

Probability Density describes how likely each possible outcome is. Many quantities fall reliably within a narrow range, such as lawnmower deaths in the US, which are about 50 to 100 every year, and this is what we call a Normal Distribution. A Normal Distribution is something like the height of the population: the smallest person in the world is 3 ½ feet, and the tallest person is 9 feet. Everyone is somewhere inside that distribution. You will not find anyone who is a hundred feet tall.

This can be contrasted with wealth, for example, which is obviously not normally distributed. There are a lot of people of medium wealth, and there are a few people with hundreds of billions of dollars.

Now, let’s look at terrorist attacks.

In 2017, the number of people who died from terrorist attacks was nine, but the probability density is spread very thinly over a huge range of outcomes, and this is a so-called Fat-Tailed Distribution. It means that any particular extreme outcome has a small likelihood, but that likelihood is not zero. There might be nine people killed, or zero, or a thousand, or two thousand, or ten thousand, or a hundred million, and each of these has a non-zero chance.

There is a zero chance of 100 million people dying from lawnmowers, but when it comes to terrorist attacks, there could be some catastrophic attack resulting in that. Once we look at the distribution curves, we start seeing them in lots of other places.
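To make the contrast concrete, here is a minimal Python sketch that compares the chance of exceeding a given death toll under a thin-tailed model and a fat-tailed one. Everything in it is an assumption for illustration: the Normal Distribution stands in for lawnmower deaths (centred on the 69 quoted above, with a made-up spread of 10), a Pareto distribution stands in for a fat tail, and none of the parameters are fitted to real data. Under the normal model the chance of a huge toll is not literally zero, but it is so small that the computer rounds it to zero.

```python
import math


def normal_tail(x, mean, sd):
    """P(X > x) for a normal (thin-tailed) distribution."""
    return 0.5 * math.erfc((x - mean) / (sd * math.sqrt(2)))


def pareto_tail(x, x_min, alpha):
    """P(X > x) for a Pareto distribution, a common fat-tailed model."""
    if x <= x_min:
        return 1.0
    return (x_min / x) ** alpha


# Illustrative parameters only; these are assumptions, not estimates.
THIN_MEAN, THIN_SD = 69.0, 10.0   # "lawnmower-like" yearly deaths
FAT_X_MIN, FAT_ALPHA = 1.0, 1.0   # "terrorism-like" heavy tail

for deaths in (100, 1_000, 10_000, 1_000_000):
    thin = normal_tail(deaths, THIN_MEAN, THIN_SD)
    fat = pareto_tail(deaths, FAT_X_MIN, FAT_ALPHA)
    print(f"P(more than {deaths:>9,} deaths): "
          f"thin-tailed={thin:.3e}  fat-tailed={fat:.3e}")
```

The thin-tailed probability collapses almost immediately once you move away from the typical range, while the fat-tailed probability shrinks slowly and stays above zero even for catastrophic numbers.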

Probability of Accidents

Let’s consider small banks. Small local banks that are not interconnected and that raise their deposits from the local community could make a lot of bad loans and fail. Consequently, everyone locally could lose their money. So what if the bank is bigger? Well, that’s great! The large bank has a smaller chance of failure, but it carries the risk of something catastrophic and systemic happening. For example, we saw during the 2008 global financial crisis that huge banks had to be bailed out by taxpayers because of complex financial instruments that they had written, and these banks were too big to fail, so there is a huge systemic risk. And it is a long-tail risk, so you might say that things are more robust from year to year. You’re not going to have your local bank fold again, but the entire system might collapse because of some kind of catastrophic event.

The same applies to cars. With human-driven cars, if someone has an accident, what is the maximum number of people that could be killed? Five occupants in a car that crashes into a bus? A dozen or a couple of dozen people would be the maximum. What about self-driving cars? People say they are not going to make the same kinds of errors. Robots will never fall asleep, robots always follow the rules, there will be no speeding robots, and so on.

I would say that there is instead a different risk: what if there is a centrally distributed database, that database gets corrupted, and suddenly 10,000 cars crash simultaneously? Tesla can update its software overnight. You never thought that could be done when you bought the car, and it is fantastic. But what if there is some fault in an update and suddenly everyone’s Teslas break? There is a small but non-zero possibility of something big happening.
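The difference between many small independent failures and one shared point of failure can be sketched with a small simulation. This is a toy model under stated assumptions, not a description of any real bank or car fleet: the fleet size, the failure probability, and the function names are all hypothetical. Both scenarios have the same average number of failures per year; what differs is the worst year.

```python
import random


def simulate_independent(n_units, p_fail, n_years, rng):
    """Each unit fails on its own, like individual drivers or small local banks."""
    return [sum(rng.random() < p_fail for _ in range(n_units))
            for _ in range(n_years)]


def simulate_common_cause(n_units, p_fail, n_years, rng):
    """All units share one point of failure, like a fleet-wide software update."""
    return [n_units if rng.random() < p_fail else 0
            for _ in range(n_years)]


# Hypothetical numbers chosen only to illustrate the shape of the risk.
N_UNITS, P_FAIL, N_YEARS = 1_000, 0.01, 2_000
rng = random.Random(0)  # fixed seed so the run is repeatable

independent = simulate_independent(N_UNITS, P_FAIL, N_YEARS, rng)
common = simulate_common_cause(N_UNITS, P_FAIL, N_YEARS, rng)

for name, losses in (("independent", independent), ("common cause", common)):
    mean = sum(losses) / len(losses)
    print(f"{name:>12}: average failures per year={mean:.1f}, "
          f"worst year={max(losses)}")
```

In the independent case every year looks much like the average. In the common-cause case most years have no failures at all, and the occasional bad year takes out every unit at once.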

Read the continuation of the series for my opinion on the risks we have for lawyers and a discussion of inevitable changes in technology.

Watch the Legal Technology: Risk and Regulation video here:

Adrian Cartland is the Creator of Ailira, the Artificial Intelligence that automates legal information and research, and the Principal of Cartland Law, a firm that specialises in devising novel solutions to complex tax, commercial, and technological legal issues and transactions.